Introduction

This tutorial walks through deploying a production-ready Kubernetes cluster on CentOS Linux 8.

Prerequisites

The following prerequisites must be in place before you deploy a Kubernetes cluster.

Prepare your environment

You can set up a Kubernetes cluster on bare metal, on virtual machines, or on a cloud platform such as AWS, Azure, or GCP.

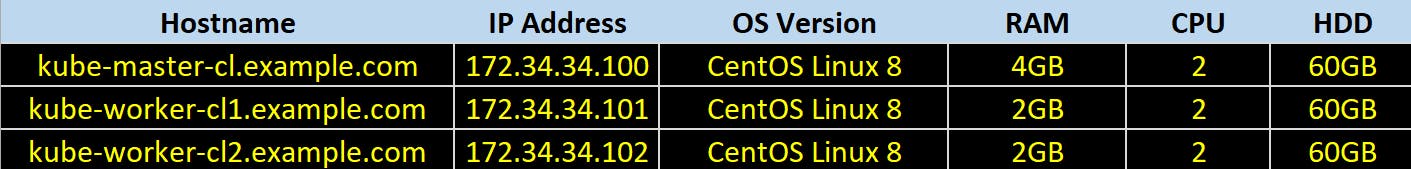

Here I have three CentOS Linux 8 VMs running with the following configuration:

Configure hostnames correctly on all the nodes

If you don't have a DNS server configured, update the /etc/hosts file on the master and on all the worker nodes.

For reference, here is the /etc/hosts content from my master node.

root@kube-master-cl:~# cat /etc/hosts

127.0.0.1 localhost

172.34.34.100 kube-master-cl.example.com kube-master-cl

172.34.34.101 kube-worker-cl1.example.com kube-worker-cl1

172.34.34.102 kube-worker-cl2.example.com kube-worker-cl2

root@kube-master-cl:~#
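If you script this step, it helps to make it safe to re-run. The following is a minimal sketch, not part of the original setup: add_host_entry is a hypothetical helper that appends an entry only when the short hostname is not already in the file. It is demonstrated on a scratch file so it can be tried without touching the real /etc/hosts.

```shell
# add_host_entry is a hypothetical helper, not part of any standard tool.
# It appends "IP FQDN SHORTNAME" to FILE unless SHORTNAME is already present.
add_host_entry() {
    local file="$1" ip="$2" fqdn="$3" short="$4"
    # -F: fixed string, -w: whole-word match, -q: no output
    if ! grep -qwF -- "$short" "$file"; then
        printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
    fi
}

# Demonstrate on a scratch file rather than the real /etc/hosts.
hosts_file="$(mktemp)"
echo '127.0.0.1 localhost' > "$hosts_file"
add_host_entry "$hosts_file" 172.34.34.100 kube-master-cl.example.com kube-master-cl
add_host_entry "$hosts_file" 172.34.34.101 kube-worker-cl1.example.com kube-worker-cl1
add_host_entry "$hosts_file" 172.34.34.102 kube-worker-cl2.example.com kube-worker-cl2
# Running it again for an existing host adds nothing.
add_host_entry "$hosts_file" 172.34.34.100 kube-master-cl.example.com kube-master-cl
cat "$hosts_file"
```

To apply it for real, pass /etc/hosts as the first argument and run it as root on every node.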

Turn the swap off on all the nodes

Why do we need to turn off swap on Kubernetes nodes?

The Kubernetes scheduler determines the best available node on which to deploy newly created pods, based in part on the memory each node has available. If memory swapping is allowed to occur on a host system, that accounting becomes unreliable, which can lead to performance and stability issues within Kubernetes. For this reason the kubelet, by default, refuses to start while swap is enabled, so you must disable swap on the host system.

swapoff -a && sed -i '/ swap / s/^/#/' /etc/fstab

You need to run this on all the nodes (Master + Workers)
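One caveat with the sed expression above: it prefixes every line containing " swap " with a #, so running it twice double-comments the entry. As a hedged alternative sketch, the hypothetical helper comment_swap_entries below skips lines that are already commented and also matches tab-separated fields. It assumes GNU sed (the default on CentOS) and is shown against a scratch file with example device names.

```shell
# comment_swap_entries is a hypothetical helper, not a standard tool.
# It comments out active swap entries in the given fstab-style file.
comment_swap_entries() {
    local fstab="$1"
    # Match lines with no '#' before a whitespace-delimited "swap" field,
    # so entries that are already commented out are left alone.
    sed -E -i '/^[^#]*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Try it on a scratch file; the device names are examples only.
sample_fstab="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/cl-root /    xfs  defaults 0 0' \
    '/dev/mapper/cl-swap none swap defaults 0 0' > "$sample_fstab"
comment_swap_entries "$sample_fstab"
comment_swap_entries "$sample_fstab"   # second run changes nothing
cat "$sample_fstab"
```

On a real node you would pass /etc/fstab after running swapoff -a. Afterwards, swapon --show should print nothing.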

Disable SELinux

Disabling SELinux is required so that containers can access the host filesystem, which Kubernetes pod networks and other services need.

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

reboot

You need to run this on all the nodes (Master + Workers)

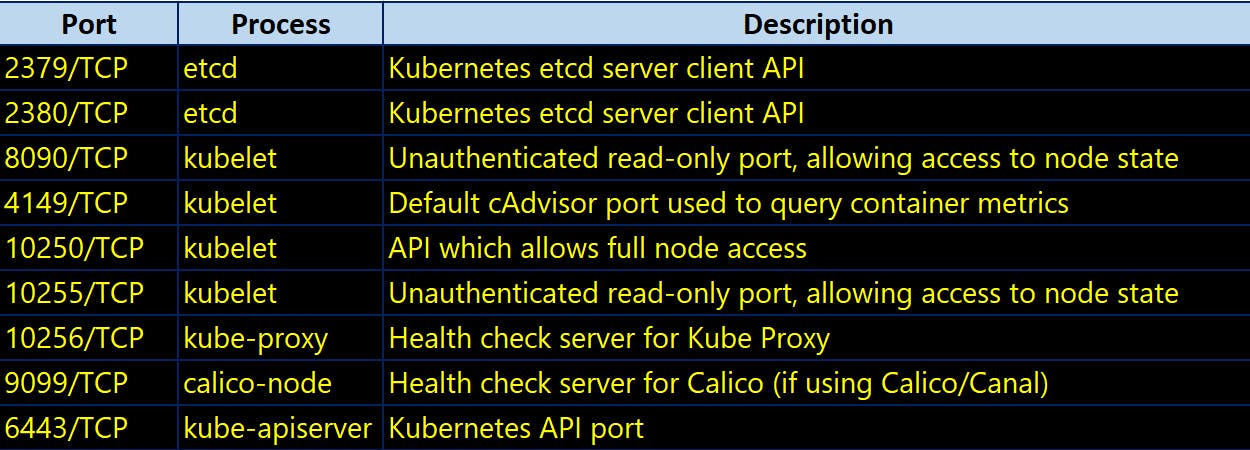

Enable Firewall rules

By default, no blocking firewall rules are configured at the host level. Here is the list of commonly used ports:

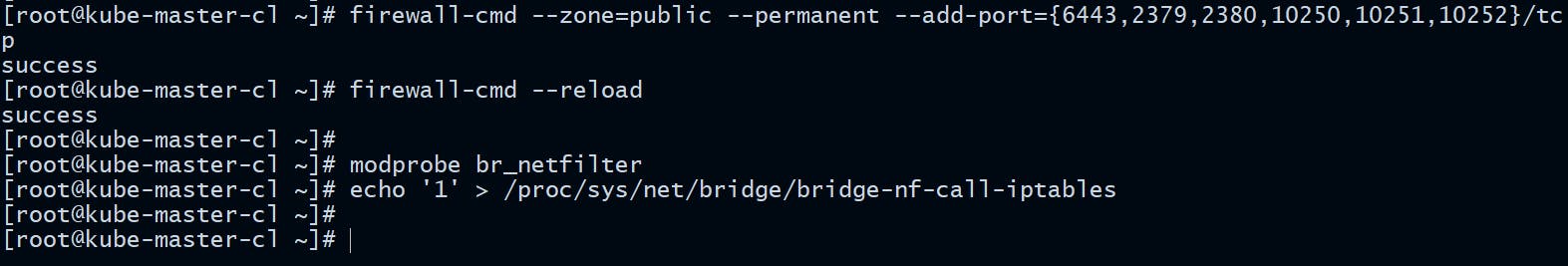

Configure the following firewall rules on the Master node.

firewall-cmd --zone=public --permanent --add-port={6443,2379,2380,10250,10251,10252}/tcp

firewall-cmd --reload

firewall-cmd --list-ports

modprobe br_netfilter

echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables
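The modprobe and echo commands above take effect immediately but do not survive a reboot. A common way to persist them, sketched here under assumptions, is a modules-load.d entry plus a sysctl.d drop-in. The file name k8s.conf and the helper persist_bridge_settings are illustrative choices, and the helper takes a root prefix so it can be tried in a scratch directory first.

```shell
# persist_bridge_settings is a hypothetical helper. Its argument is a
# directory prefix: a scratch directory to preview, or "" to write under /etc.
persist_bridge_settings() {
    local root="$1"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
    # Load the br_netfilter module at boot.
    echo 'br_netfilter' > "$root/etc/modules-load.d/k8s.conf"
    # Make bridged traffic visible to iptables at boot.
    echo 'net.bridge.bridge-nf-call-iptables = 1' > "$root/etc/sysctl.d/k8s.conf"
}

# Preview the generated files in a scratch directory.
scratch="$(mktemp -d)"
persist_bridge_settings "$scratch"
cat "$scratch/etc/modules-load.d/k8s.conf" "$scratch/etc/sysctl.d/k8s.conf"
```

On a real node, run persist_bridge_settings "" as root and then sysctl --system to apply the setting without rebooting.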

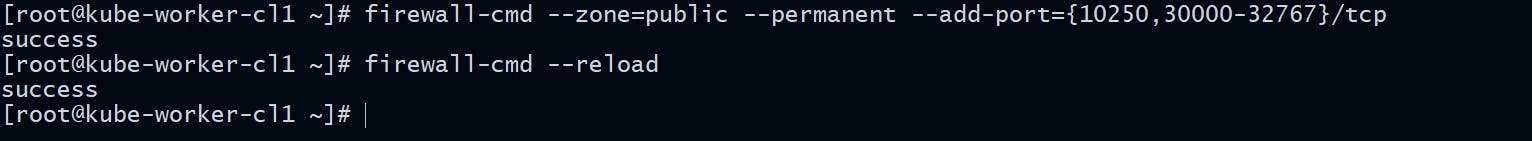

On the worker nodes, run the following commands.

firewall-cmd --zone=public --permanent --add-port={10250,30000-32767}/tcp

firewall-cmd --reload

firewall-cmd --list-ports

For more details go here.

Begin the cluster deployment

Once the prerequisite configuration is done, follow the steps below in order.

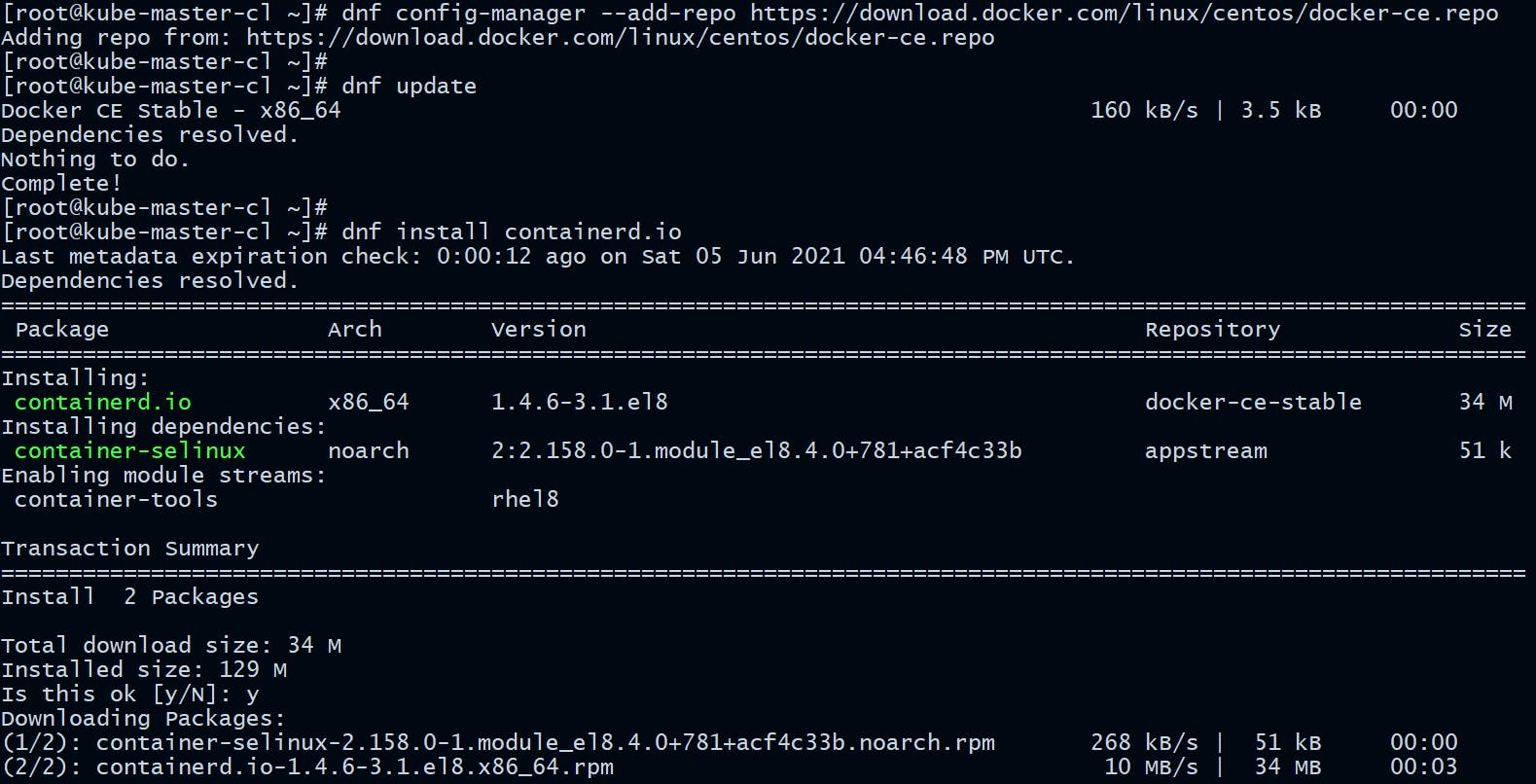

STEP 1: Install docker-ce and containerd

Kubernetes requires a container runtime on every node in the cluster. The container runtime is the software responsible for running containers on a machine.

There are several common container runtimes we can use such as:

- containerd

- CRI-O

- Docker

Kubernetes deprecated Docker as a container runtime (through its dockershim component) in version 1.20 and removed that support in version 1.24, leaving CRI runtimes such as containerd and CRI-O. Docker remains a popular tool for developing and building container images and running them locally.

This step needs to be performed on the Master Node, and each Worker Node.

dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo

dnf install containerd.io

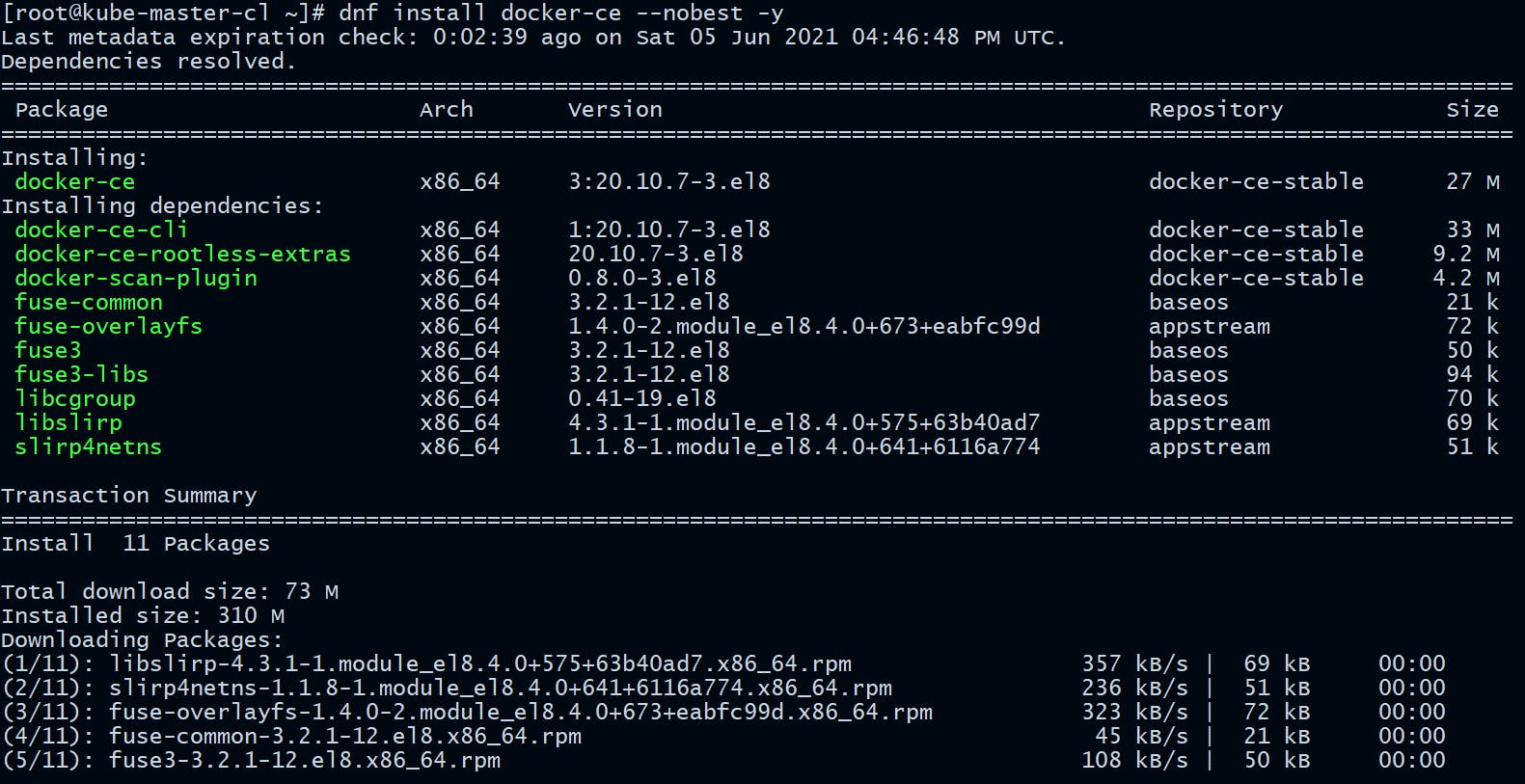

Now install docker-ce.

dnf install docker-ce --nobest -y

Verify the docker client and server version.

[root@kube-master-cl ~]# docker version

Client: Docker Engine - Community

Version: 20.10.7

API version: 1.41

Go version: go1.13.15

Git commit: f0df350

Built: Wed Jun 2 11:56:24 2021

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.7

API version: 1.41 (minimum version 1.12)

Go version: go1.13.15

Git commit: b0f5bc3

Built: Wed Jun 2 11:54:48 2021

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.4.6

GitCommit: d71fcd7d8303cbf684402823e425e9dd2e99285d

runc:

Version: 1.0.0-rc95

GitCommit: b9ee9c6314599f1b4a7f497e1f1f856fe433d3b7

docker-init:

Version: 0.19.0

GitCommit: de40ad0

Now enable and start the Docker service.

systemctl enable docker

systemctl start docker

You need to run this on all the nodes (Master + Workers)

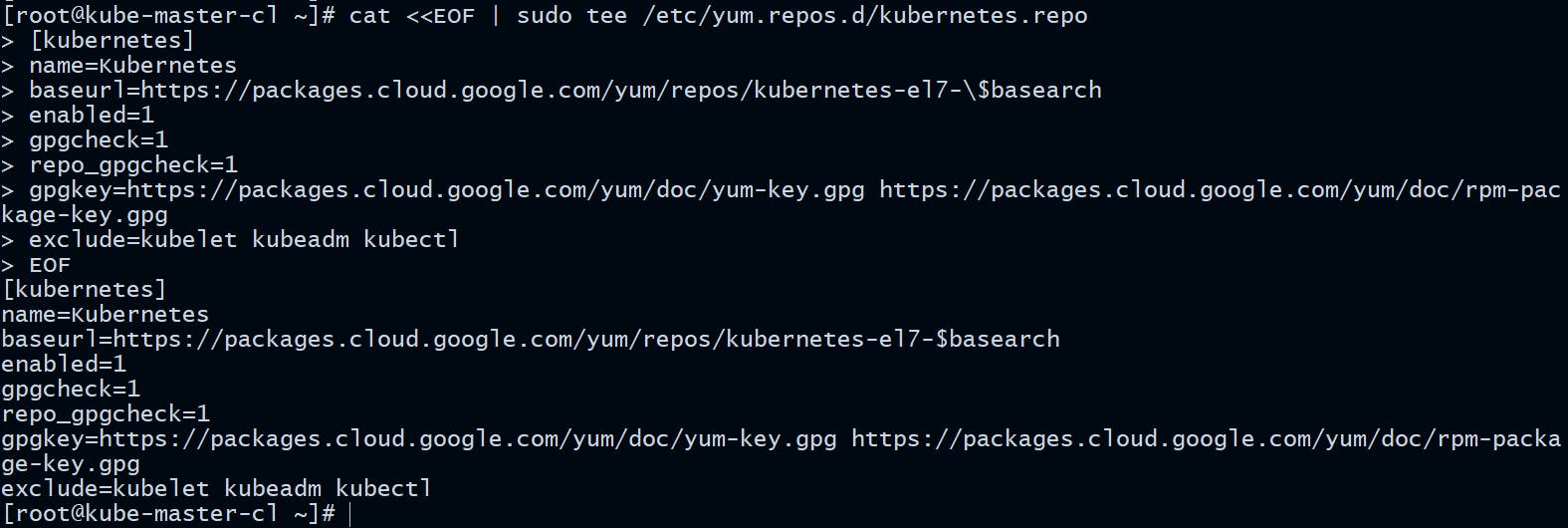

STEP 2: Configure Kubernetes Repository

Add the official Kubernetes repository and GPG keys:

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

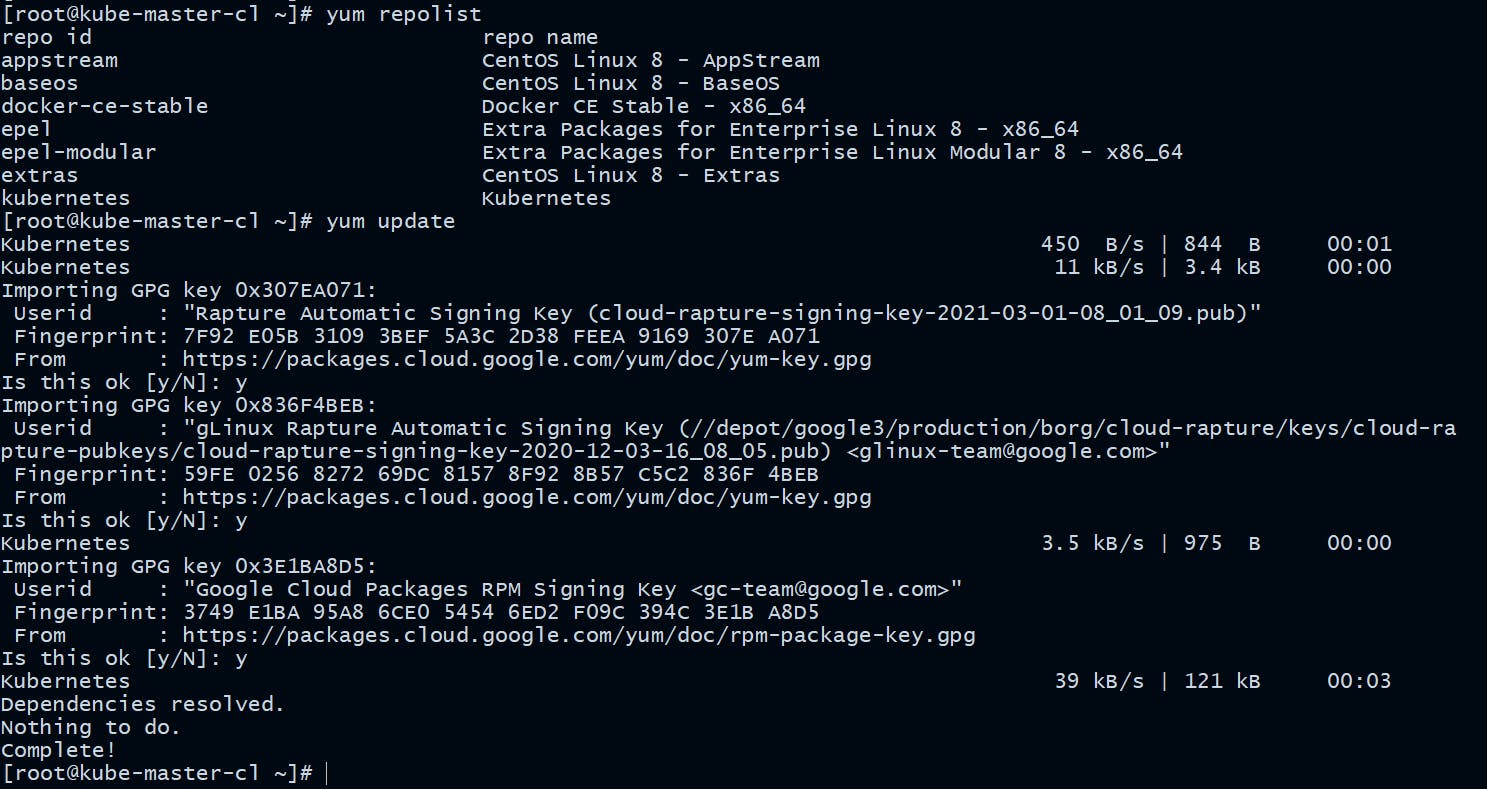

Update the repository package indexes.

yum repolist

yum update

You need to run this on all the nodes (Master + Workers)

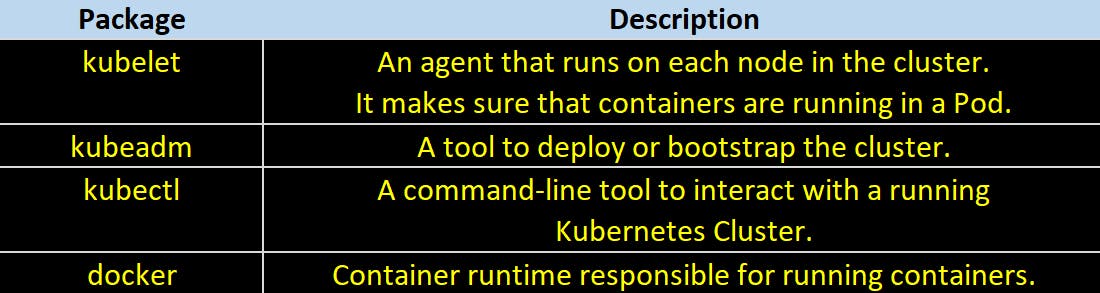

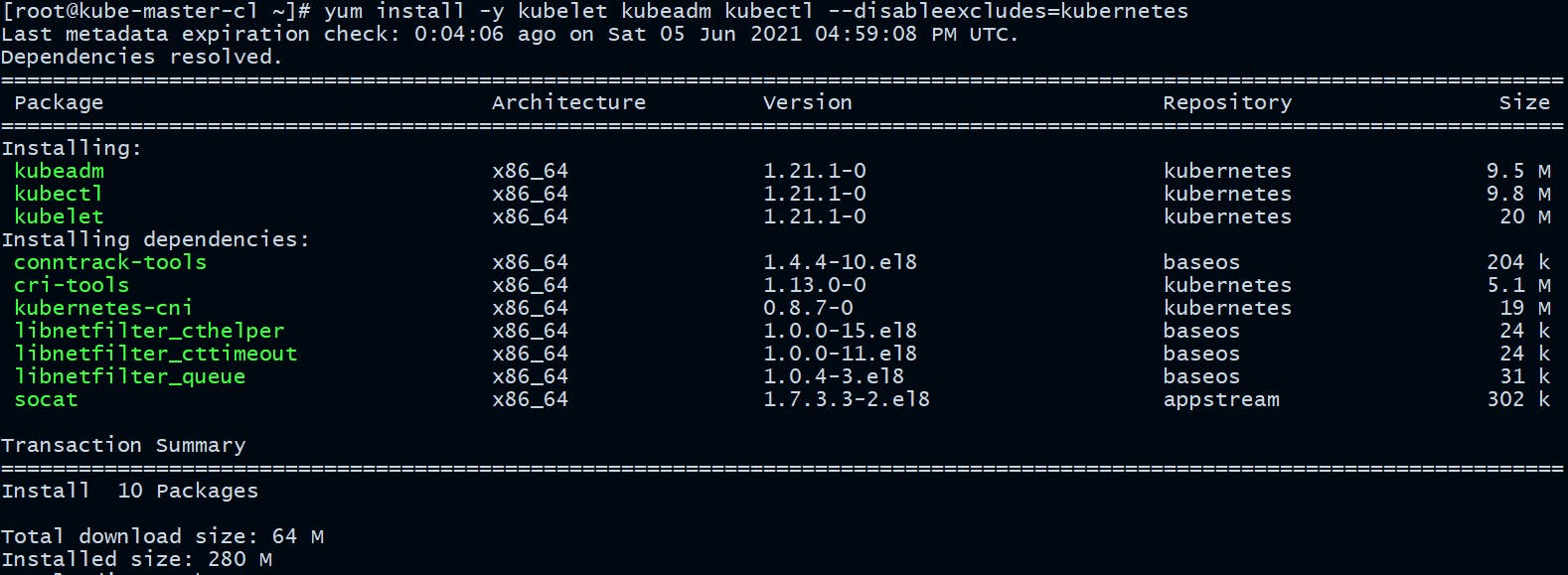

STEP 3: Install kubelet, kubeadm, kubectl

These three packages are required to run and manage Kubernetes.

Install the following packages on each node:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Verify the package installation and versions:

kubectl version:

[root@kube-master-cl ~]# kubectl version --client

Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.1", GitCommit:"5e58841cce77d4bc13713ad2b91fa0d961e69192", GitTreeState:"clean", BuildDate:"2021-05-12T14:18:45Z", GoVersion:"go1.16.4", Compiler:"gc", Platform:"linux/amd64"}

kubeadm version:

[root@kube-master-cl ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.1", GitCommit:"5e58841cce77d4bc13713ad2b91fa0d961e69192", GitTreeState:"clean", BuildDate:"2021-05-12T14:17:27Z", GoVersion:"go1.16.4", Compiler:"gc", Platform:"linux/amd64"}

kubelet version:

[root@kube-master-cl ~]# kubelet --version

Kubernetes v1.21.1

You need to run this on all the nodes (Master + Workers)

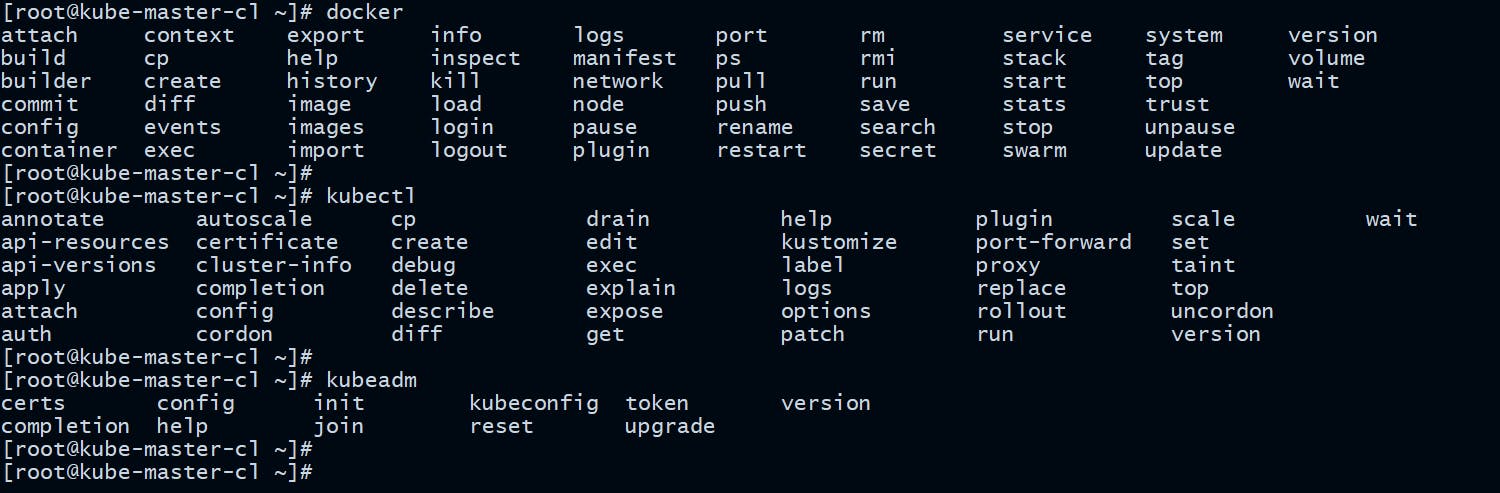

STEP 4: Enable bash completion

Enable bash completion on all the nodes so that you don't have to type every command in full; the Tab key completes it for you.

yum install bash-completion -y

curl https://raw.githubusercontent.com/docker/docker-ce/master/components/cli/contrib/completion/bash/docker -o /etc/bash_completion.d/docker.sh

echo 'source <(kubectl completion bash)' >>~/.bashrc

kubectl completion bash >/etc/bash_completion.d/kubectl

echo 'source <(kubeadm completion bash)' >>~/.bashrc

kubeadm completion bash >/etc/bash_completion.d/kubeadm

logout

You need to run this on all the nodes (Master + Workers)

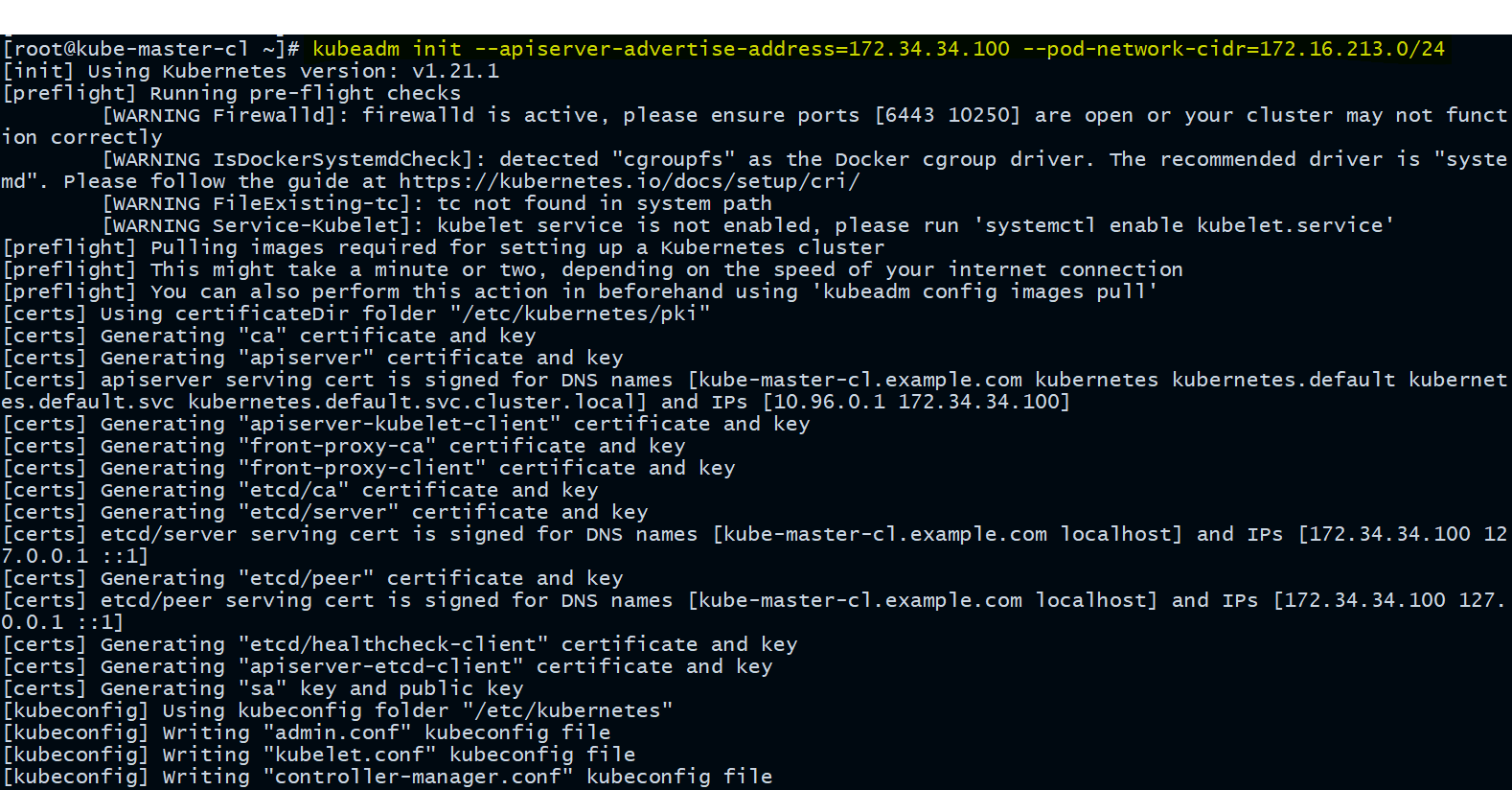

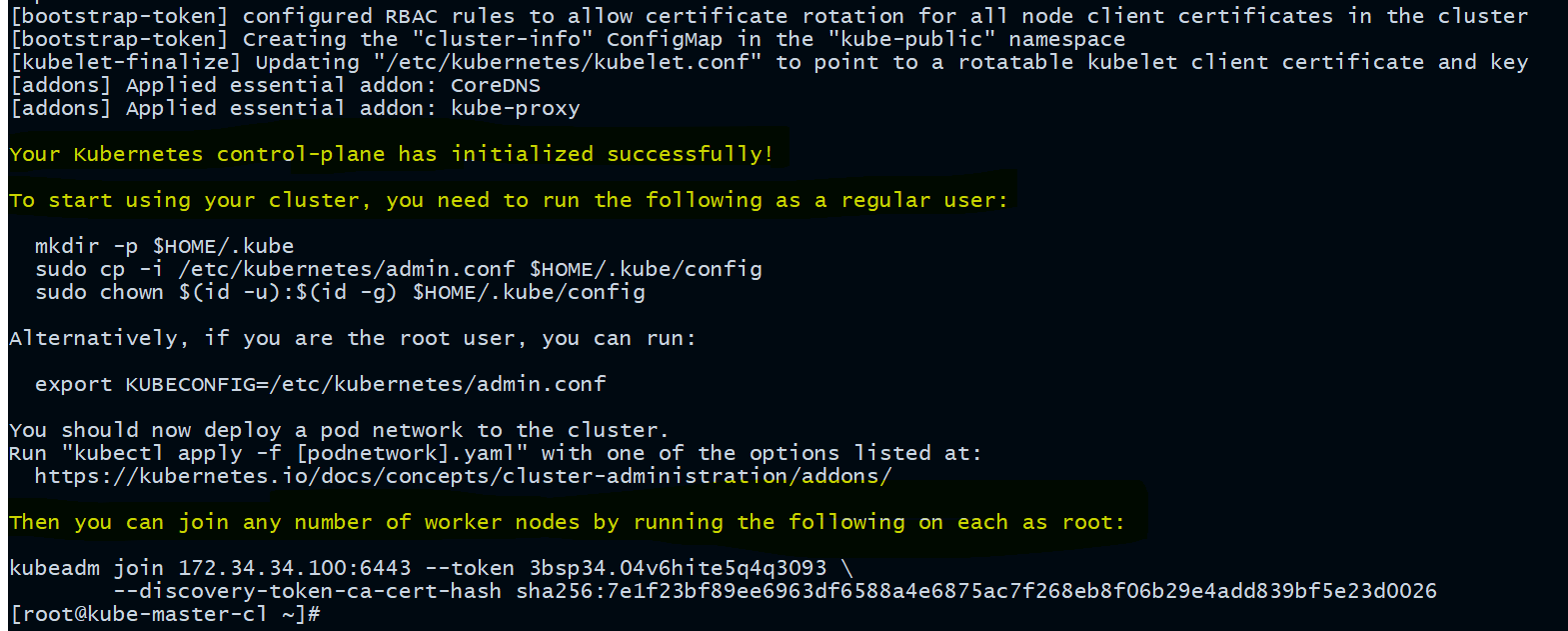

STEP 5: Create Cluster with kubeadm

It's time to initialize the cluster by executing the following command:

[root@kube-master-cl ~]# kubeadm init --apiserver-advertise-address=172.34.34.100 --pod-network-cidr=172.16.213.0/24

You need to run this on Master node

It's always good to set --apiserver-advertise-address explicitly when starting the Kubernetes cluster using kubeadm. It sets the advertise address for this particular control-plane node's API server. If it is not set, the default network interface is used.

The same goes for --pod-network-cidr, which specifies the range of IP addresses for the pod network. If set, the control plane automatically allocates CIDRs for every node. For more options, please refer to the kubeadm init documentation.
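To get a feel for how many pod IP addresses a given --pod-network-cidr provides, the prefix length can be turned into an address count. This is only illustrative arithmetic: cidr_address_count is a hypothetical helper and handles IPv4 prefixes only, and the 10.244.0.0/16 range is just a comparison value, not something used in this tutorial.

```shell
# cidr_address_count is a hypothetical helper for IPv4 CIDR notation.
cidr_address_count() {
    local cidr="$1"
    local prefix_len="${cidr#*/}"        # text after the slash, e.g. 24
    echo $(( 1 << (32 - prefix_len) ))   # 2^(32 - prefix length)
}

cidr_address_count 172.16.213.0/24   # prints 256
cidr_address_count 10.244.0.0/16     # prints 65536
```

The /24 used in this tutorial therefore covers 256 addresses for the whole cluster. That is enough for a small test setup, while a larger cluster would usually need a wider range.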

Manage cluster as a regular user:

The output of the kubeadm init command above tells you that, to start using your cluster, you need to run the following commands as a regular user.

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

You need to run this on Master node

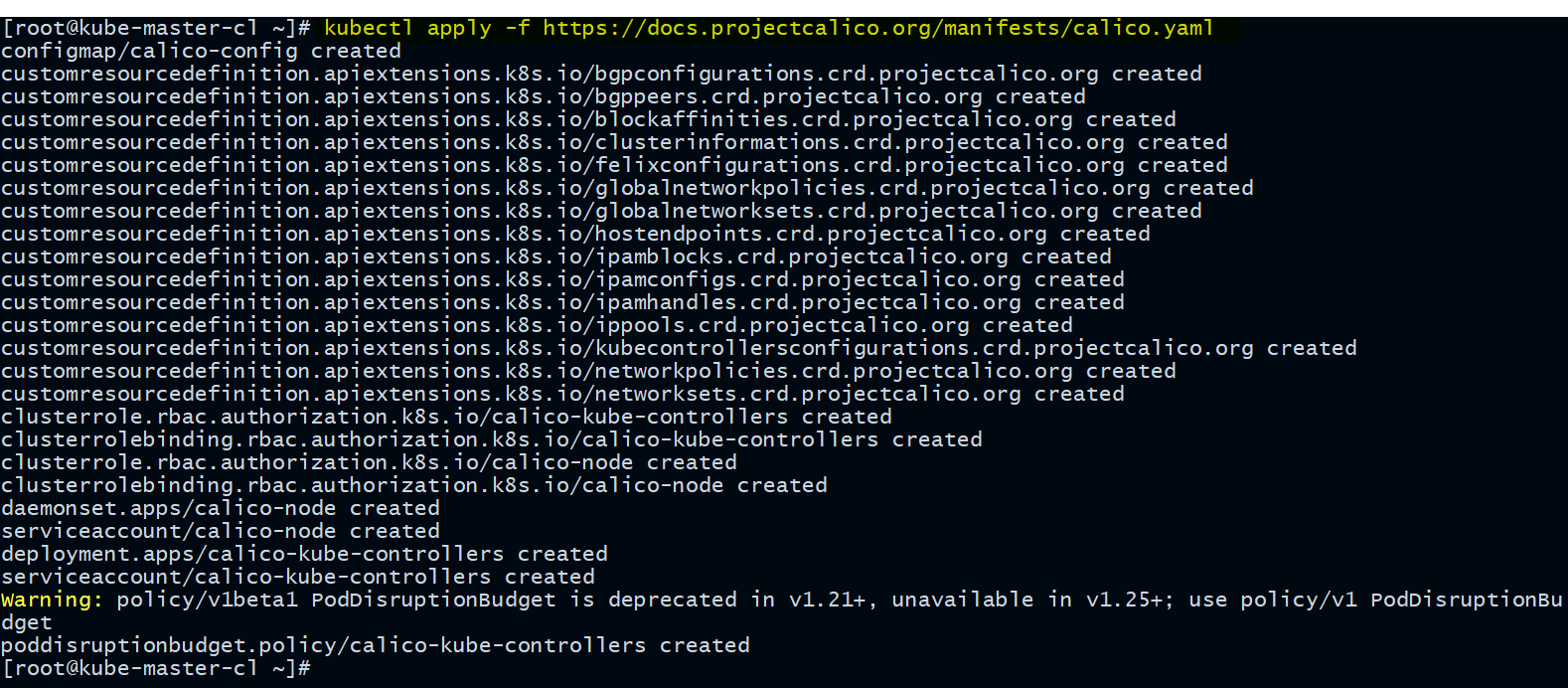

STEP 6: Setup Cluster Networking

We need to deploy the pod network so that containers on different nodes can communicate with each other. The pod network is the overlay network between the worker nodes.

An overlay network is a virtual network of nodes and logical links, which are built on top of an existing network.

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

You need to run this on Master node

Calico is an open source networking and network security solution for containers, virtual machines, and native host-based workloads.

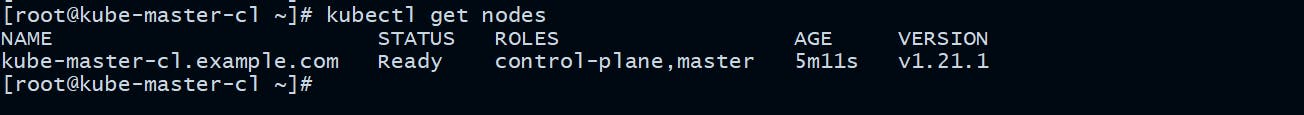

Now you can check the cluster status.

[root@kube-master-cl ~]# kubectl get nodes

Great! Our control plane node, also known as the master node, is now in the Ready state.

STEP 7: Configure Node IP

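We need to configure the node IP in the kubelet environment file on each individual node. On CentOS, the kubelet service reads this file from /etc/sysconfig/kubelet (the /etc/default/kubelet path applies to Debian-based systems). If the kubelet is already running on a node, restart it afterwards with systemctl restart kubelet so that the setting takes effect.

[root@kube-master-cl ~]# touch /etc/sysconfig/kubelet

[root@kube-master-cl ~]# echo "KUBELET_EXTRA_ARGS=--node-ip=172.34.34.100" > /etc/sysconfig/kubelet

On the workers:

[root@kube-worker-cl1 ~]# touch /etc/sysconfig/kubelet

[root@kube-worker-cl1 ~]# echo "KUBELET_EXTRA_ARGS=--node-ip=172.34.34.101" > /etc/sysconfig/kubelet

[root@kube-worker-cl2 ~]# touch /etc/sysconfig/kubelet

[root@kube-worker-cl2 ~]# echo "KUBELET_EXTRA_ARGS=--node-ip=172.34.34.102" > /etc/sysconfig/kubelet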
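Because each node needs its own address in this file, a small helper can reduce copy-and-paste mistakes. The sketch below is an illustration under assumptions: set_kubelet_node_ip is a hypothetical function that rewrites only the KUBELET_EXTRA_ARGS line of the given environment file and keeps any other lines. Note that it replaces whatever extra arguments were already set on that line.

```shell
# set_kubelet_node_ip is a hypothetical helper, not part of kubeadm.
# Usage: set_kubelet_node_ip ENV_FILE NODE_IP
set_kubelet_node_ip() {
    local env_file="$1" node_ip="$2"
    local tmp
    tmp="$(mktemp)"
    touch "$env_file"
    # Keep every line except a previous KUBELET_EXTRA_ARGS assignment.
    grep -v '^KUBELET_EXTRA_ARGS=' "$env_file" > "$tmp" || true
    echo "KUBELET_EXTRA_ARGS=--node-ip=${node_ip}" >> "$tmp"
    # Write back in place so the file keeps its owner and permissions.
    cat "$tmp" > "$env_file"
    rm -f "$tmp"
}

# Demonstrate on a scratch file with the master's address from this tutorial.
env_file="$(mktemp)"
set_kubelet_node_ip "$env_file" 172.34.34.100
cat "$env_file"
```

On a real node, point it at the kubelet environment file from this step and pass that node's own IP address.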

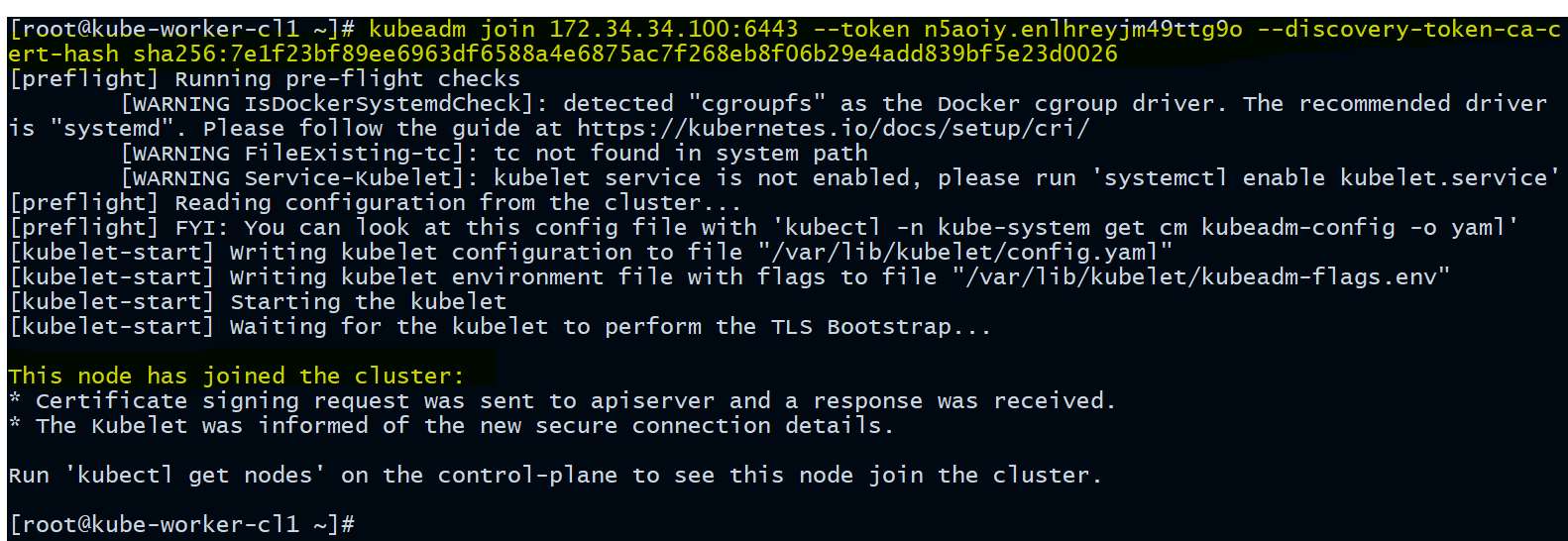

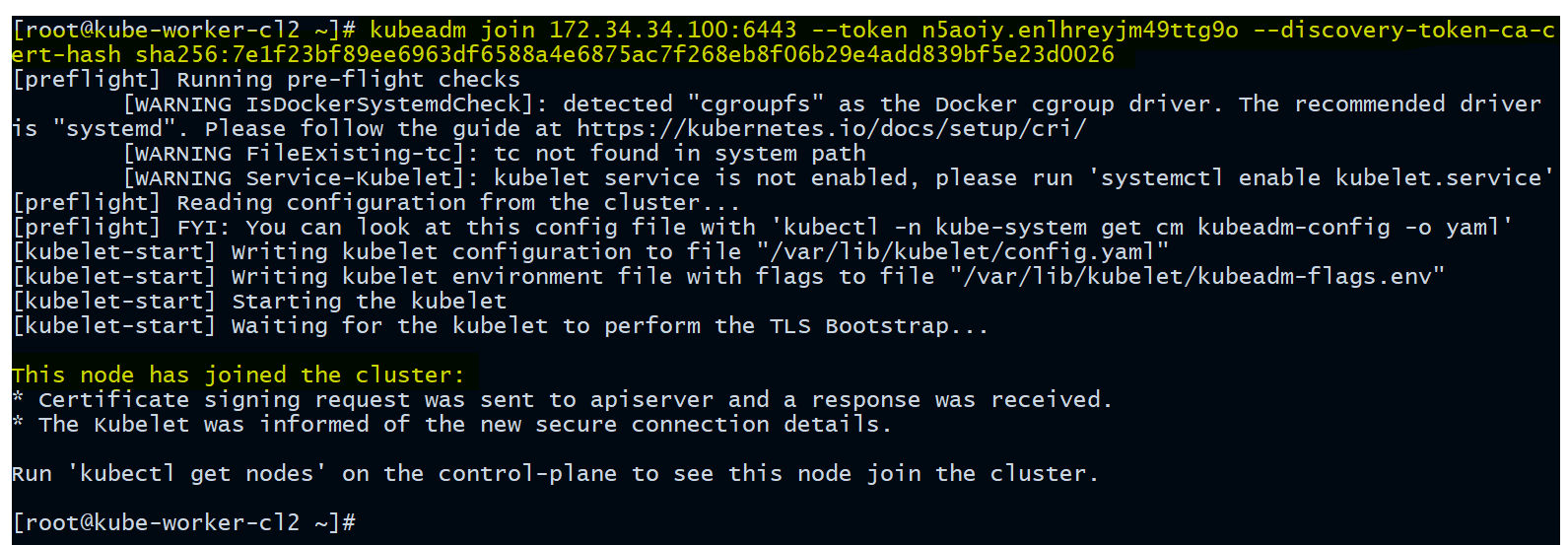

STEP 8: Join Worker Nodes to Cluster

It's time to join our worker nodes to the cluster.

As indicated in Step 5 output, you can use the kubeadm join command on each worker node to connect it to the cluster.

Run this command on each worker node.

[root@kube-worker-cl1 ~]# kubeadm join 172.34.34.100:6443 --token n5aoiy.enlhreyjm49ttg9o --discovery-token-ca-cert-hash sha256:7e1f23bf89ee6963df6588a4e6875ac7f268eb8f06b29e4add839bf5e23d0026

[root@kube-worker-cl2 ~]# kubeadm join 172.34.34.100:6443 --token n5aoiy.enlhreyjm49ttg9o --discovery-token-ca-cert-hash sha256:7e1f23bf89ee6963df6588a4e6875ac7f268eb8f06b29e4add839bf5e23d0026

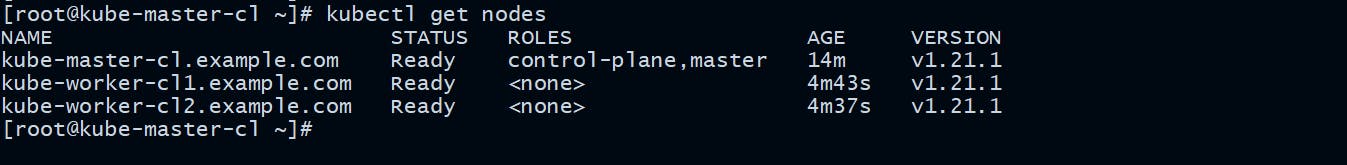

Now check the cluster status again:

[root@kube-master-cl ~]# kubectl get nodes

[root@kube-master-cl ~]# kubectl get nodes -o wide

You can see that all the worker nodes have successfully joined the cluster and are ready to serve workloads.

If you somehow miss the output from the kubeadm init command, there is nothing to worry about: you can always run the following command to generate the kubeadm join command again.

[root@kube-master-cl ~]# kubeadm token create --print-join-command

kubeadm join 172.34.34.100:6443 --token ad43oc.j3ftiz3931d3k7n2 --discovery-token-ca-cert-hash sha256:7e1f23bf89ee6963df6588a4e6875ac7f268eb8f06b29e4add839bf5e23d0026

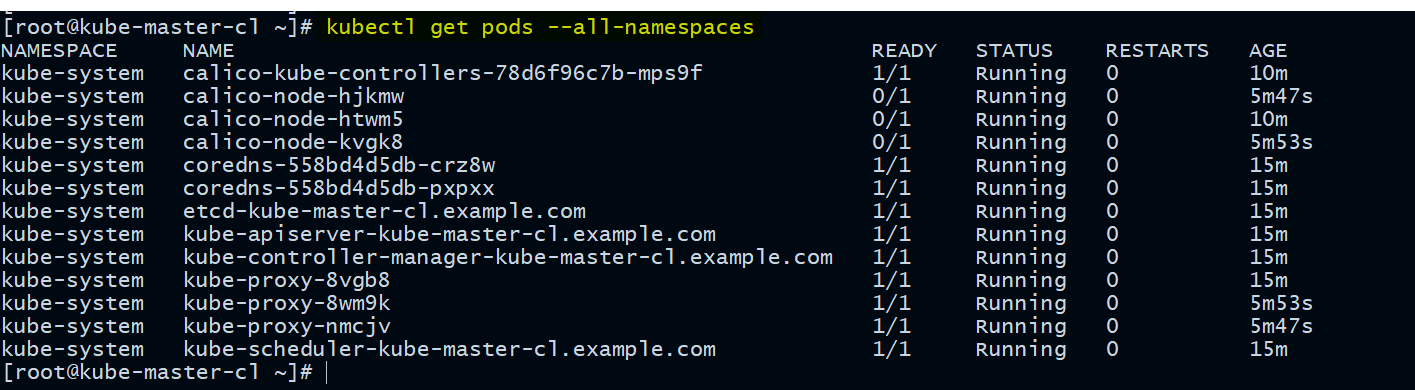

Verify that all the pods in all namespaces are running:

[root@kube-master-cl ~]# kubectl get pods --all-namespaces

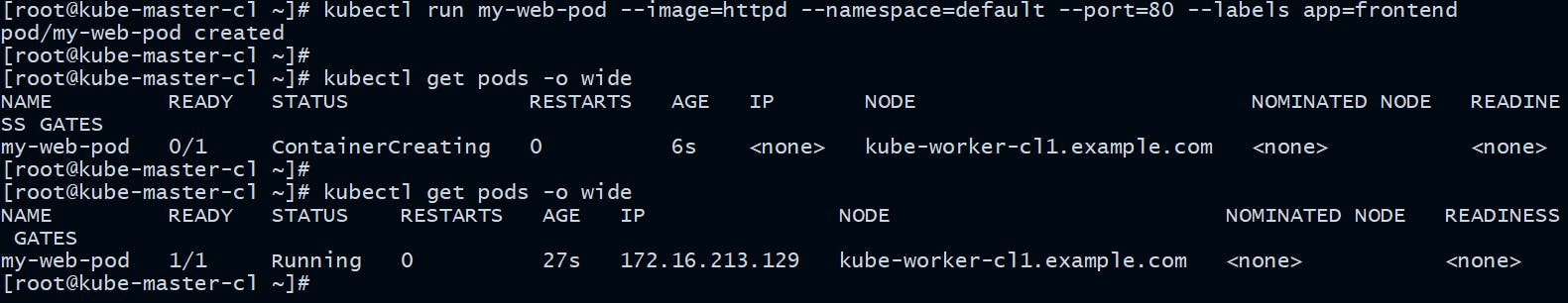

STEP 9: Test the cluster by creating a test pod

Run the following command to create a test pod on our Kubernetes cluster. Don't worry about the commands I am using here; we will cover them in detail in upcoming articles.

kubectl run my-web-pod --image=httpd --namespace=default --port=80 --labels app=frontend

Verify the pod status

kubectl get pods -o wide
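Instead of re-running kubectl get pods until the status changes, kubectl wait can block until the pod reports Ready. This is a minimal sketch, assuming the pod name and namespace from the command above; wait_for_pod is a hypothetical wrapper and the 120-second default timeout is an arbitrary choice.

```shell
# wait_for_pod is a hypothetical wrapper around "kubectl wait".
# Usage: wait_for_pod POD [NAMESPACE] [TIMEOUT]
wait_for_pod() {
    local pod="$1" namespace="${2:-default}" timeout="${3:-120s}"
    kubectl wait --for=condition=Ready "pod/${pod}" \
        --namespace="$namespace" --timeout="$timeout"
}

# Only call it where kubectl is installed (the Master node in this setup).
if command -v kubectl >/dev/null 2>&1; then
    wait_for_pod my-web-pod default 120s
fi
```

The command returns a non-zero exit code if the pod is not Ready within the timeout, which makes it convenient in scripts.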

Congratulations!! You now have a fully functional, production-ready Kubernetes cluster up and running on CentOS Linux.

I hope you liked the tutorial. Stay tuned and don't forget to provide your feedback in the response section.

Happy Learning!