Kubernetes provides two controllers for pod replication: ReplicaSets and ReplicationControllers.

In this article I am going to explain the duties that ReplicaSets and ReplicationControllers perform within a Kubernetes cluster.

Before exploring these concepts, let me answer a question that might arise in your mind: why do we need replication in the first place?

Why is replication required?

The main purposes of replication are reliability, failover, load balancing, and scaling. Replication ensures that the predefined number of pods always exists.

Let us understand this with an example:

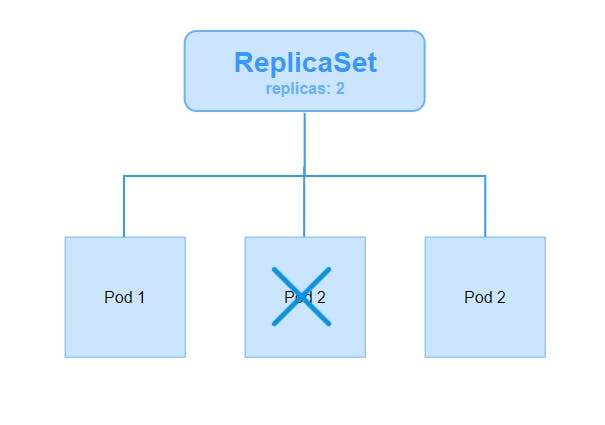

Let's assume there is a pod running our application. For some reason our application crashes and the pod fails. Now the end users can no longer access our application.

To prevent users from losing access to our application, we would like to have more than one instance of it running. That way, if one pod fails, the application is still running on the other one. The replication controller helps us run multiple instances of a single pod in the Kubernetes cluster, thus providing high availability.

What if I plan to have a single-pod application?

If I plan to have a single pod carrying my application, can I use a replication controller?

Of course you can. Even if you have a single pod, the replication controller can help by automatically bringing up a new pod when the existing one fails. Thus the replication controller ensures that the specified number of pods is running at all times.

Another reason we need a replication controller is to create multiple pods and share the load across them. A simple scenario: we have a single pod serving a set of users. When the number of users increases, we deploy an additional pod to balance the load across the two pods. If the demand increases further and we run out of resources on the first node, we can deploy additional pods on the other worker nodes in the cluster.

The replication controller spans multiple worker nodes in the cluster. It helps us balance the load across multiple pods on different nodes and scale our application when demand increases.

It’s important to note that there are two similar terms: ReplicationController and ReplicaSet.

What is a ReplicationController?

A ReplicationController ensures that a specified number of pod replicas are running at any given time. In other words, a ReplicationController makes sure that a pod or a homogeneous set of pods is always up and available.
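To make the idea concrete, here is a minimal Python sketch of the kind of reconciliation a replication controller performs: compare the desired count with what is running, then create or remove pods to close the gap. The reconcile function, the demo name prefix and the counter-based pod names are made up for illustration; the real controller talks to the Kubernetes API and generates random name suffixes.

```python
import itertools

def reconcile(desired, running, name_prefix, ids):
    """Return the pod list after one reconciliation pass (illustrative only)."""
    pods = list(running)
    # Too few pods: create replacements until the count matches.
    while len(pods) < desired:
        pods.append(f"{name_prefix}-{next(ids)}")
    # Too many pods: drop the surplus (a no-op when there is none).
    del pods[desired:]
    return pods

ids = itertools.count(1)                  # stand-in for random name suffixes
pods = reconcile(3, [], "demo", ids)      # initial creation of 3 pods
pods.remove("demo-2")                     # simulate a pod failure
pods = reconcile(3, pods, "demo", ids)    # the controller replaces it
print(pods)  # ['demo-1', 'demo-3', 'demo-4']
```

Note that the replacement pod gets a new name; the controller cares about the count, not about the identity of individual pods.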

What is a ReplicaSet?

A ReplicaSet's purpose is to maintain a stable set of replica Pods running at any given time. As such, it is often used to guarantee the availability of a specified number of identical Pods. ReplicaSets are also known as the next-generation ReplicationController.

A ReplicaSet also checks whether a target pod is already managed by another controller (like a Deployment or another ReplicaSet).

Difference between ReplicaSets and ReplicationController

ReplicaSet and ReplicationController do almost the same thing. Both of them ensure that a specified number of pod replicas are running at any given time.

The difference lies in the selectors they use to pick the pods to replicate. ReplicaSets use set-based selectors, which give more flexibility, while ReplicationControllers use equality-based selectors.

ReplicaSet example:

ReplicaSets support set-based selectors. The three types of operators used in set-based selectors are:

in, notin, exists (only the key identifier)

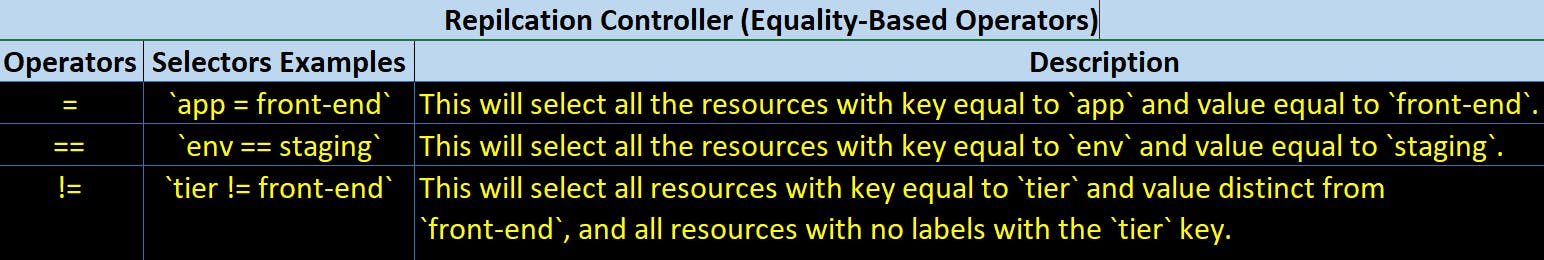
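The behaviour of these three operators can be sketched in a few lines of Python. This is only an illustration of the matching rules, not Kubernetes code; the function name matches_expression is hypothetical, and I use the In / NotIn / Exists spelling that appears in manifest files.

```python
def matches_expression(labels, key, operator, values=()):
    """Return True if the pod labels satisfy one set-based requirement."""
    if operator == "In":
        # The key must be present and its value must be one of `values`.
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # Pods that do not carry the key at all also match NotIn.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        # Only the key is checked; the value is ignored.
        return key in labels
    raise ValueError(f"unsupported operator: {operator}")

pod_labels = {"env": "qa", "tier": "front-end"}
print(matches_expression(pod_labels, "tier", "In", ["front-end", "back-end"]))  # True
print(matches_expression(pod_labels, "env", "NotIn", ["prod"]))                 # True
print(matches_expression(pod_labels, "release", "Exists"))                      # False
```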

ReplicationController example:

ReplicationControllers support equality-based selectors. The three types of operators used in equality-based selectors are:

=, ==, !=

The first two represent equality (and are simply synonyms), while the latter represents inequality.
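As a rough illustration, the Python sketch below evaluates a comma-separated equality-based selector string against a pod's labels. The helper name matches_equality is hypothetical, and the parser is deliberately simple (it does no validation of keys or values).

```python
def matches_equality(labels, selector):
    """Return True if `labels` satisfy every requirement in `selector`."""
    for requirement in selector.split(","):
        requirement = requirement.strip()
        if "!=" in requirement:
            key, value = requirement.split("!=", 1)
            # Inequality fails only when the key is present with that exact value.
            if labels.get(key.strip()) == value.strip():
                return False
        else:
            # Treat "==" as a synonym for "=".
            key, value = requirement.replace("==", "=", 1).split("=", 1)
            if labels.get(key.strip()) != value.strip():
                return False
    return True

pod_labels = {"app": "httpd", "tier": "qa"}
print(matches_equality(pod_labels, "app=httpd,tier==qa"))  # True
print(matches_equality(pod_labels, "tier!=qa"))            # False
```

All requirements in the selector are combined with a logical AND, so a pod must satisfy every one of them to match.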

Creating a Replication Controller

Now I will demonstrate how to create a ReplicationController using a YAML manifest file.

root@kube-master:~/replication# cat rc-demo.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: lco-httpd-rc-demo
spec:
  replicas: 3
  selector:
    app: httpd
    tier: qa
  template:
    metadata:
      name: httpd
      labels:
        app: httpd
        tier: qa
    spec:
      containers:
      - name: httpd-container
        image: httpd
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

The above YAML manifest file will create a ReplicationController that keeps 3 replica pods running at all times.

We will learn more about labels and selectors in our upcoming articles.

Run the following command to create the ReplicationController resource.

root@kube-master:~/replication# kubectl apply -f rc-demo.yml

replicationcontroller/lco-httpd-rc-demo created

Verify the above resource creation:

root@kube-master:~/replication# kubectl get replicationcontrollers lco-httpd-rc-demo

NAME                DESIRED   CURRENT   READY   AGE
lco-httpd-rc-demo   3         3         1       25s

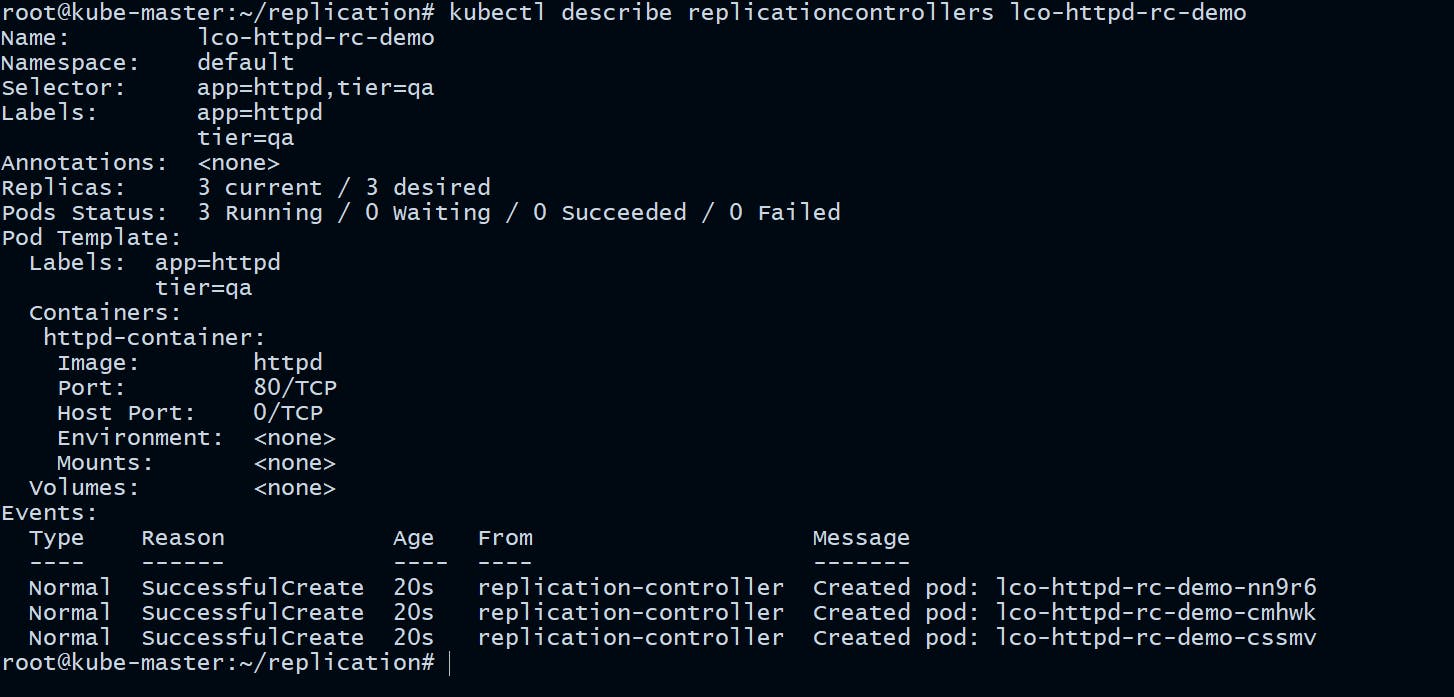

Describe the ReplicationController we created:

root@kube-master:~/replication# kubectl describe replicationcontrollers lco-httpd-rc-demo

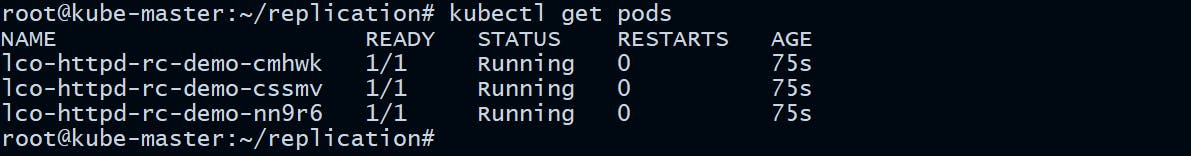

Verify the running pods:

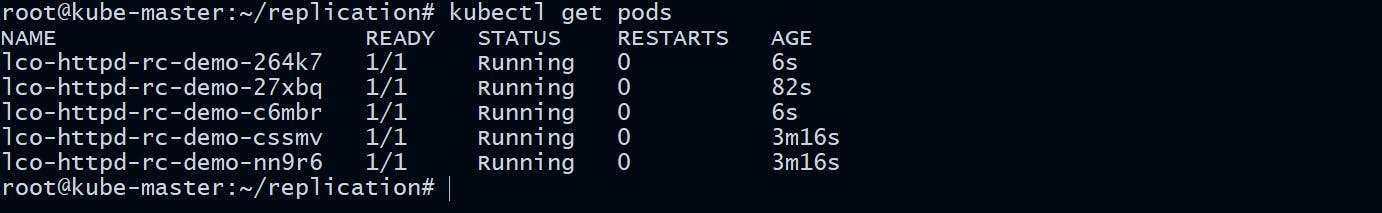

root@kube-master:~/replication# kubectl get pods

Let's verify the ReplicationController's functionality by manually deleting a running pod that it controls. The replication controller should automatically spawn a new pod to meet the desired state.

Here’s an example:

root@kube-master:~/replication# kubectl delete pod lco-httpd-rc-demo-cmhwk

pod "lco-httpd-rc-demo-cmhwk" deleted

Verify the status of the running pods:

root@kube-master:~/replication# kubectl get pods

A new pod (lco-httpd-rc-demo-27xbq) has just been created with a different name to maintain the replica count of 3 that we specified in our configuration.

If we omit the selector from the specification file, the replication controller automatically sets it to the labels of the pod template. The pod labels must match the replication controller's selector; otherwise the pods fall outside the scope of the replication controller.
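The defaulting and matching rule can be sketched in Python as follows. This is an illustration under my own assumptions, not the actual API server logic: effective_selector is a hypothetical helper, and the spec is a plain dictionary shaped like the manifest above.

```python
def effective_selector(spec):
    """Return the selector a ReplicationController spec ends up with."""
    template_labels = spec["template"]["metadata"]["labels"]
    # No selector given: fall back to a copy of the pod template labels.
    selector = spec.get("selector") or dict(template_labels)
    # Every selector entry must appear, with the same value, on the template.
    mismatched = {k: v for k, v in selector.items() if template_labels.get(k) != v}
    if mismatched:
        raise ValueError(f"selector does not match template labels: {mismatched}")
    return selector

# Same labels as rc-demo.yml, but with the selector left out.
spec = {"replicas": 3, "template": {"metadata": {"labels": {"app": "httpd", "tier": "qa"}}}}
print(effective_selector(spec))  # {'app': 'httpd', 'tier': 'qa'}
```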

Scaling up a Replication Controller

root@kube-master:~/replication# kubectl scale replicationcontroller --replicas=5 lco-httpd-rc-demo

replicationcontroller/lco-httpd-rc-demo scaled

Verify the pods that are running now:

root@kube-master:~/replication# kubectl get pods

You can see above that two new pods have been added.

Deleting Replication Controller

root@kube-master:~/replication# kubectl delete replicationcontrollers lco-httpd-rc-demo

replicationcontroller "lco-httpd-rc-demo" deleted

Verify if the pods are deleted.

root@kube-master:~/replication# kubectl get pods

No resources found in default namespace.

Creating a ReplicaSet

Time to demonstrate the creation of a ReplicaSet using a YAML manifest file. To be able to create new pods when necessary, the ReplicaSet definition includes a template section containing the definition for new pods.

root@kube-master:~/replication# cat rs-demo.yml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: lco-httpd-rs-demo
spec:
  replicas: 3
  selector:
    matchLabels:
      env: qa
    matchExpressions:
    - { key: tier, operator: In, values: [front-end] }
  template:
    metadata:
      name: httpd
      labels:
        env: qa
        tier: front-end
    spec:
      containers:
      - name: httpd-container
        image: httpd
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

Above is the manifest file which, when submitted to the Kubernetes cluster, will create the defined ReplicaSet and the Pods that it manages.

Make sure you do not use a label that is already in use by another controller. Otherwise, another ReplicaSet or controller may acquire the pod(s) first. Also notice that the labels defined in the pod template must match those defined in the selector (the matchLabels and matchExpressions parts).

root@kube-master:~/replication# kubectl apply -f rs-demo.yml

replicaset.apps/lco-httpd-rs-demo created

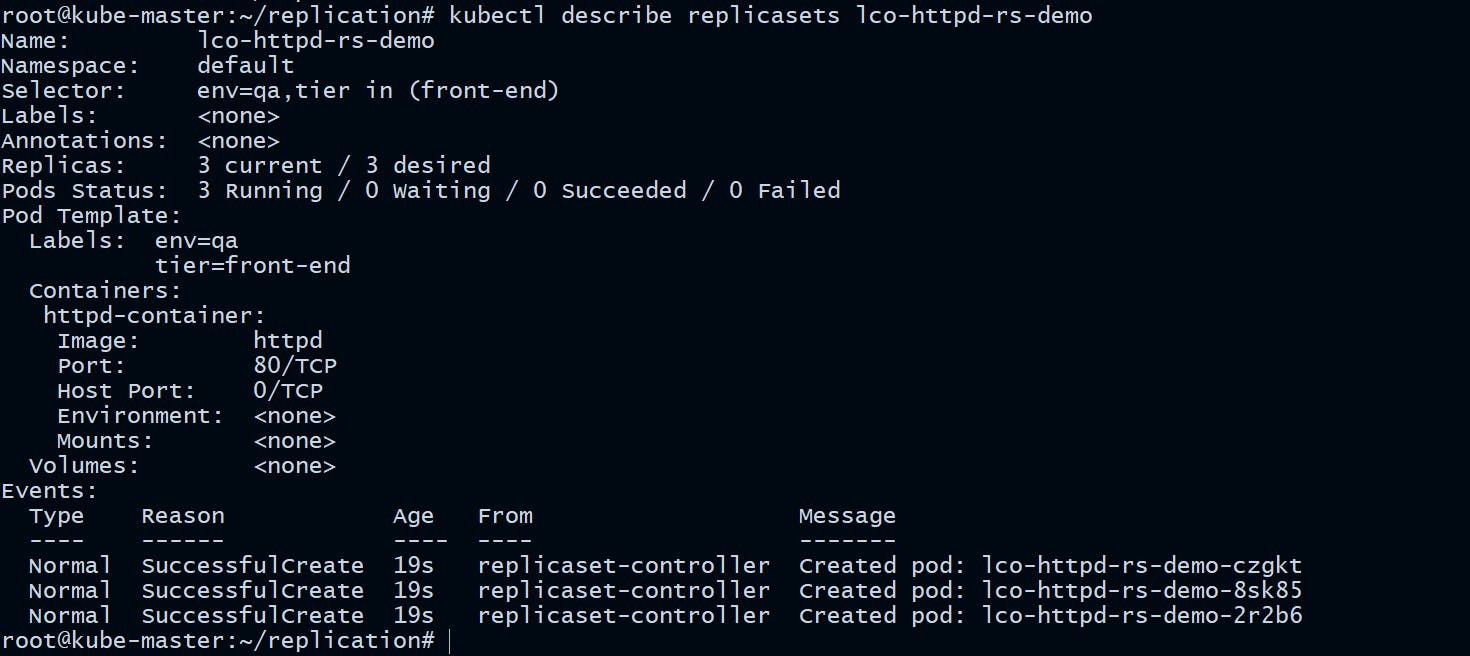

Describe the ReplicaSet we created:

root@kube-master:~/replication# kubectl describe replicasets lco-httpd-rs-demo

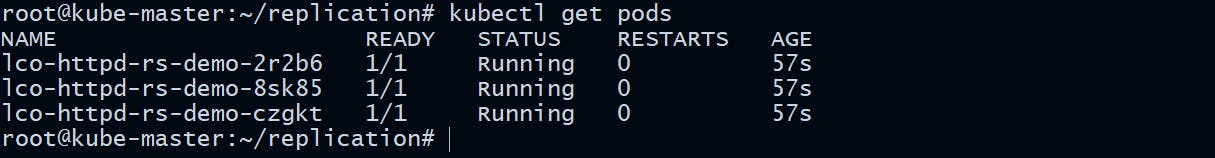

Verify the running pods:

root@kube-master:~/replication# kubectl get pods

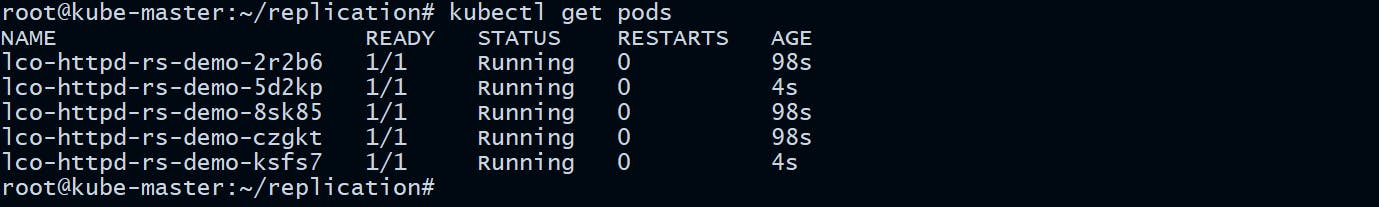

Scaling up a ReplicaSet

root@kube-master:~/replication# kubectl scale replicaset --replicas=5 lco-httpd-rs-demo

replicaset.apps/lco-httpd-rs-demo scaled

Verify the running pods:

root@kube-master:~/replication# kubectl get pods

Configure Auto Scaling on a ReplicaSet

We can configure autoscaling for a ReplicaSet so that it scales up to a defined maximum number of pods according to the CPU load on its pods. Run the following command to enable autoscaling for our ReplicaSet:

root@kube-master:~/replication# kubectl autoscale replicaset --max=5 lco-httpd-rs-demo

horizontalpodautoscaler.autoscaling/lco-httpd-rs-demo autoscaled

With this autoscaler in place, the ReplicaSet will not be automatically scaled beyond 5 pods.
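How does the autoscaler pick a replica count? The sketch below follows the basic formula described in the Kubernetes documentation for the Horizontal Pod Autoscaler: desired = ceil(current replicas x current metric / target metric), clamped between the minimum and the maximum. It is a simplification that ignores the tolerance band and stabilization behaviour, and the 80% CPU target is an assumed value, since the command above does not set one.

```python
import math

def desired_replicas(current_replicas, current_cpu_percent,
                     target_cpu_percent=80, min_replicas=1, max_replicas=5):
    """Simplified HPA calculation; target_cpu_percent=80 is an assumed value."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    # Never go below the minimum or above the --max we configured.
    return max(min_replicas, min(max_replicas, raw))

print(desired_replicas(3, 160))  # 5 (the formula gives 6, capped by max_replicas)
print(desired_replicas(3, 40))   # 2
```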

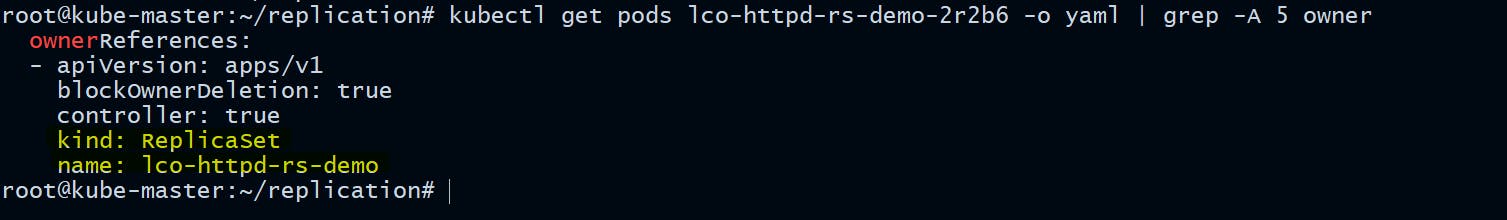

Find out the owner of running pods

In a production environment where you may have thousands of pods running, you may want to verify whether a particular pod is actually managed by this ReplicaSet or not. By querying the pod, you can get this info:

root@kube-master:~/replication# kubectl get pods lco-httpd-rs-demo-nbgp5 -o yaml | grep -A 5 owner
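If you prefer to check ownership programmatically, the same information lives in the pod's metadata.ownerReferences field. The following Python sketch reads it from a dictionary; the controller_owner helper is hypothetical, and the sample pod is hand-written to resemble the JSON form of a pod object, not copied from a real cluster.

```python
def controller_owner(pod):
    """Return (kind, name) of the controlling owner, or None for a bare pod."""
    for ref in pod.get("metadata", {}).get("ownerReferences", []):
        # The owner flagged with controller: true is the one managing the pod.
        if ref.get("controller"):
            return ref["kind"], ref["name"]
    return None

# Hand-written sample data (an assumption, not real kubectl output).
pod = {
    "metadata": {
        "name": "lco-httpd-rs-demo-nbgp5",
        "ownerReferences": [
            {"apiVersion": "apps/v1", "kind": "ReplicaSet",
             "name": "lco-httpd-rs-demo", "controller": True},
        ],
    }
}
print(controller_owner(pod))  # ('ReplicaSet', 'lco-httpd-rs-demo')
```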

Deleting a ReplicaSet

A ReplicaSet can be deleted by issuing a kubectl command like the following:

root@kube-master:~/replication# kubectl delete replicasets lco-httpd-rs-demo

replicaset.apps "lco-httpd-rs-demo" deleted

The above command will delete the ReplicaSet and all the managed pods.

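But let's say you just want to delete the ReplicaSet resource, not the pods it manages. Run the following command (on newer kubectl versions the equivalent flag is --cascade=orphan, and --cascade=false is deprecated):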

root@kube-master:~/replication# kubectl delete replicasets lco-httpd-rs-demo --cascade=false

replicaset "lco-httpd-rs-demo" deleted

Verify if the pods are still running or not:

root@kube-master:~/replication# kubectl get pods

Verify if the ReplicaSet is deleted:

root@kube-master:~/replication# kubectl get replicasets

No resources found in default namespace.

One should never create a bare pod with a label that matches the selector of a ReplicaSet unless the pod definition matches the ReplicaSet's template. Once you create it, the ReplicaSet controller will automatically adopt it. If the bare pod's specification differs from the template the controller uses (for example, a different container image), the ReplicaSet will still treat it as one of its replicas, and may even terminate it to keep the replica count, so you risk losing data.
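The adoption behaviour can be illustrated with a small Python sketch. It is a simplification under my own assumptions: adopt_orphans is a hypothetical helper, pods are plain dictionaries, and the selector is reduced to simple key/value pairs rather than the full matchLabels and matchExpressions form.

```python
def adopt_orphans(pods, selector, owner):
    """Mark ownerless pods whose labels match the selector as owned by `owner`."""
    adopted = []
    for pod in pods:
        matches = all(pod["labels"].get(k) == v for k, v in selector.items())
        # Only pods without an existing controller are eligible for adoption.
        if matches and pod["owner"] is None:
            pod["owner"] = owner
            adopted.append(pod["name"])
    return adopted

pods = [
    {"name": "lco-httpd-rs-demo-nbgp5", "labels": {"env": "qa", "tier": "front-end"},
     "owner": "lco-httpd-rs-demo"},
    # Hypothetical bare pod created by hand with matching labels.
    {"name": "bare-pod", "labels": {"env": "qa", "tier": "front-end"}, "owner": None},
    {"name": "other-pod", "labels": {"env": "dev"}, "owner": None},
]
print(adopt_orphans(pods, {"env": "qa", "tier": "front-end"}, "lco-httpd-rs-demo"))
# ['bare-pod']
```

Notice that nothing in the check looks at the container image or any other part of the pod spec; matching labels alone are enough for the pod to be adopted.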

The recommended method is to always use a controller like a ReplicaSet or a Deployment to create and maintain your pods.

This is all about Kubernetes ReplicaSet and Replication Controllers.

Hope you like the tutorial. Stay tuned and don't forget to provide your feedback in the response section.

Happy Learning!